GitLab: Helm chart of values, dependencies, and deployment in Kubernetes with AWS S3

We continue getting acquainted with GitLab and its deployment in Kubernetes. The first part was GitLab: Components, Architecture, Infrastructure, and Launching from the Helm Chart in Minikube; now let's get ready to deploy to AWS Elastic Kubernetes Service.

What we will do, and where:

- deploy to AWS from the Helm chart, as a test environment

- Kubernetes: AWS EKS

- object store: AWS S3

- PostgreSQL: with the operator

- Redis: for now, we will use the default one from the chart, then switch to KeyDB, which is also deployed by an operator

- Gitaly: let's try running it in the cluster, maybe on a dedicated node. We only have 150–200 users, so the load should not be large, and neither scaling nor, especially, Praefect is needed

The GitLab Operator looks interesting in general, but its documentation is full of "Experimental" and "Beta" labels, so we won't touch it for now.

Helm chart prerequisites

Regarding the chart: first, we need to go through the available parameters of the chart, and see what we will need from external resources (load balancers, S3 buckets, PostgreSQL), and what else can be configured through its values.

Don't forget to look at the GitLab chart prerequisites; we are interested in the following sections:

- PostgreSQL: we will use the operator and deploy our own cluster with the databases

- Redis: we have a KeyDB operator and will use it later, but for now let's use the default from the chart

- Networking and DNS: we use the AWS ALB Controller and will create a certificate in AWS Certificate Manager; DNS is served by Route53, and records are created by ExternalDNS

- Persistence: an interesting nuance. It may be necessary to set reclaimPolicy to Retain, see Configuring Cluster Storage

- Prometheus: also something to think about. We already have the Kube Prometheus Stack with its own Prometheus and Alertmanager, so we will have to decide whether to disable the one built into GitLab or keep it

- Outgoing email: we will not configure it yet, but will have to come back to it later. AWS SES is supported (I saw it somewhere in the documentation), so I don't expect issues here

- RBAC: our cluster uses it and the chart supports it, so we keep the default, that is, enabled

We have to take into account that the chart includes a whole bunch of dependencies, and it is useful to go through them and see what else is deployed there and with what parameters.

The Helm chart structure and its values.yaml

The GitLab Helm chart has a complex structure of its values, as the chart includes a set of sub-charts and dependencies, see GitLab Helm subcharts.

To visualize its values structure, you can look at the structure of the charts directory with child charts:

```
$ tree -d gitlab/charts/
gitlab/charts/
|-- certmanager-issuer
|   `-- templates
|-- gitlab
|   |-- charts
|   |   |-- geo-logcursor
|   |   |   `-- templates
|   |   |-- gitaly
|   |   |   `-- templates
|   |   |-- gitlab-exporter
|   |   |   `-- templates
|   |   |-- gitlab-grafana
|   |   |   `-- templates
|   |   |-- gitlab-pages
|   |   |   `-- templates
|   |   |-- gitlab-shell
|   |   |   `-- templates
|   |   |-- kas
|   |   |   `-- templates
|   |   |-- mailroom
|   |   |   `-- templates
|   |   |-- migrations
|   |   |   `-- templates
|   |   |-- praefect
|   |   |   `-- templates
|   |   |-- sidekiq
|   |   |   `-- templates
|   |   |-- spamcheck
|   |   |   `-- templates
|   |   |-- toolbox
|   |   |   `-- templates
|   |   `-- webservice
|   |       `-- templates
|   |           `-- tests
|   `-- templates
|-- minio
|   `-- templates
|-- nginx-ingress
|   `-- templates
|       `-- admission-webhooks
|           `-- job-patch
`-- registry
    `-- templates
```

In addition, there is a set of external dependencies, see requirements.yaml.

Accordingly, the values of the main chart are divided into global parameters (see Subcharts and Global Values), which are used by the child charts, and parameters for specific charts (see Configure charts using globals). So our values.yaml will look like this:

```yaml
global:
  hosts:
    domain:
    hostSuffix: # used in ./charts/gitlab/charts/webservice, ./charts/gitlab/charts/toolbox/, ./charts/registry/, etc
  ...
gitlab:
  kas:
    enabled: # used in ./charts/gitlab/charts/kas/
  ...
nginx-ingress:
  enabled: # used in ./charts/nginx-ingress
  ...
postgresql:
  install: # used during the external PostgreSQL chart deployment
```

Now let's look at the values themselves, and at which of them can be useful to us.

Helm chart values

The “starting point” for working with the chart is Installing GitLab by using Helm.

It is also a good idea to check the default values.yaml: its comments contain links to the relevant services and parameters.

The parameters that may be of interest to us (see more in Configure charts using globals and GitLab Helm chart deployment options):

- global.hosts:
  - domain: the domain in which records for GitLab will be created
  - externalIP: it seems to be mandatory, but since we will have an AWS ALB, we won't use it
  - hostSuffix: test, adds a suffix to the subdomains being created, so we get records of the form gitlab-test.example.com
  - https: true, as the ALB will use a certificate from AWS Certificate Manager
  - ssh: here we need to set a separate subdomain to access GitLab Shell
- global.ingress: since we have an AWS ALB, see alb-full.yaml
  - annotations.*annotation-key*: add annotations for the ALB
  - configureCertmanager: has to be disabled, as SSL will be terminated on the load balancer
  - tls.secretName: not needed for the same reason, SSL is terminated on the load balancer
  - path: the ALB requires it in the form of /*
  - provider: for ALB, aws
- global.gitlabVersion: I thought to specify the GitLab version to deploy here, but it turned out that the more correct way is through the chart version, see GitLab Version
- global.psql: parameters for GitLab services to connect to PostgreSQL, see Configure PostgreSQL settings
  - host: address of the PostgreSQL Service
  - password:
    - useSecret: we will keep the password in a Secret
    - secret: the Secret name
    - key: the key (field) in the Secret that holds the password
- global.redis: eventually this will be an external KeyDB, and its access parameters will have to be set here, but for now we leave the default one, see Configure Redis settings
- global.grafana.enabled: enable it to see what dashboards are there (they will still need to be configured separately, see Grafana JSON Dashboards)
- global.registry: see Using the Container Registry
  - bucket: an S3 bucket has to be created. This parameter is used in /charts/gitlab/charts/toolbox/ for backups, while the GitLab Registry itself is configured through separate parameters, which we will look at below
- global.gitaly: for now, despite the recommendations, we will use Gitaly from the chart, deployed as a Kubernetes Pod in the cluster. Leave it with the defaults, but keep it in mind for the future
- global.minio: disable it, we use AWS S3 instead
- global.appConfig: there are a lot of things here, see Configure appConfig settings. It contains common settings for the Webservice, Sidekiq, and Gitaly charts
  - cdnHost: seems useful in general, but let's see how to use it before we touch it
  - contentSecurityPolicy: also useful, but we will configure it later, see Content Security Policy
  - enableUsagePing: "telemetry" for GitLab Inc itself. I don't see the point, so let's turn it off
  - enableSeatLink: I didn't understand what it is. The seat link support link leads "nowhere", and I found no information about seats on that page. It is probably related to the number of users in the license, and since we don't buy one, it can be turned off
  - object_store: common parameters for S3 access keys and proxies, see Consolidated object storage
    - connection: here we need to create a Secret that describes the settings for connecting to the buckets, including ACCESS/SECRET keys, but we will use a ServiceAccount and an IAM role instead of keys
    - storage_options: bucket encryption. It makes sense to change it when using AWS KMS, but we'll probably leave the default encryption
  - Specify buckets: which buckets are needed. There is a set of default names like gitlab-artifacts, but for dev and test environments it makes sense to override them
  - LFS, Artifacts, Uploads, Packages, External MR diffs, and Dependency Proxy: bucket settings for various services. Let's not touch them yet and see how it works
  - gitlab_kas: settings for the GitLab Agent for Kubernetes. We'll leave the defaults for now, not sure we'll need it
  - omniauth: SSO settings. Later we will connect authentication via Google, see OmniAuth
- global.serviceAccount: instead of ACCESS/SECRET keys, we will use Kubernetes ServiceAccounts with an IAM Role for the Pods:
  - create: enable creating ServiceAccounts for the services
  - annotations: here we specify the ARN of the IAM role
- global.nodeSelector: useful, later we will move the GitLab Pods to a dedicated set of WorkerNodes
- global.affinity and global.antiAffinity: Affinity can also be configured
- global.common.labels: let's set some environment, team, etc. labels here
- global.tracing: not touching it yet, but keeping it in mind for the future, when we add Jaeger or Grafana Tempo
- global.priorityClassName: may be useful or even necessary, will come back to it later, see Pod Priority and Preemption

That's almost all of the global values. Besides them, we will need to:

- disable certmanager: we use AWS Certificate Manager with the AWS LoadBalancer

- disable postgresql: we will use an external one

- disable gitlab-runner: we already have runners running and will try to connect them to this GitLab instance

- disable nginx-ingress: we have the AWS ALB Controller and AWS ALB load balancers

- configure Services for webservice and gitlab-shell

- configure the registry

Now, before deploying the chart, we need to prepare external services:

- PostgreSQL cluster and database

- AWS S3 buckets

- certificate in AWS Certificate Manager for load balancers

Preparation — the creation of external resources

PostgreSQL

We are using the PostgreSQL Operator, so here we need to describe the creation of a cluster.

The connection, memory, disk, and requests/limits parameters are left at the defaults for now; we'll see how many resources the cluster will need.

Describe the creation of the gitlabhq_test database with default users (defaultUsers: true):

```yaml
kind: postgresql
apiVersion: acid.zalan.do/v1
metadata:
  name: gitlab-cluster-test-psql
  namespace: gitlab-cluster-test
  labels:
    team: devops
    environment: test
spec:
  teamId: devops
  postgresql:
    version: "14"
    parameters:
      max_connections: '100'
      shared_buffers: 256MB
      work_mem: 32MB
  numberOfInstances: 3
  preparedDatabases:
    gitlabhq_test:
      defaultUsers: true
      schemas:
        public:
          defaultRoles: false
  enableMasterLoadBalancer: false
  enableReplicaLoadBalancer: false
  enableConnectionPooler: false
  enableReplicaConnectionPooler: false
  volume:
    size: 10Gi
    storageClass: encrypted
  resources:
    requests:
      cpu: "100m"
      memory: 100Mi
    limits:
      memory: 1024Mi
  enableLogicalBackup: true
  logicalBackupSchedule: "0 1 * * *"
  sidecars:
    - name: exporter
      image: quay.io/prometheuscommunity/postgres-exporter:v0.11.1
      ports:
        - name: exporter
          containerPort: 9187
          protocol: TCP
      resources:
        limits:
          memory: 50M
        requests:
          cpu: 50m
          memory: 50M
      env:
        - name: DATA_SOURCE_URI
          value: localhost/postgres?sslmode=disable
        - name: DATA_SOURCE_USER
          value: "$(POSTGRES_USER)"
        - name: DATA_SOURCE_PASS
          value: "$(POSTGRES_PASSWORD)"
        - name: PG_EXPORTER_AUTO_DISCOVER_DATABASES
          value: "true"
```

Create a namespace (here and below, kk is a shell alias for kubectl):

```
$ kk create ns gitlab-cluster-test
```

Deploy the cluster:

```
$ kk apply -f postgresql.yaml
postgresql.acid.zalan.do/gitlab-cluster-test-psql created
```

Check the Pods:

```
$ kk -n gitlab-cluster-test get pod
NAME                                READY   STATUS              RESTARTS   AGE
devops-gitlab-cluster-test-psql-0   2/2     Running             0          24s
devops-gitlab-cluster-test-psql-1   0/2     ContainerCreating   0          4s
```

Okay, they are being created.

Check the Secrets; later we will use the postgres user's Secret in the GitLab config:

```
$ kk -n gitlab-cluster-test get secret
NAME                                                                                             TYPE                                  DATA   AGE
default-token-2z2ct                                                                              kubernetes.io/service-account-token   3      4m12s
gitlabhq-test-owner-user.devops-gitlab-cluster-test-psql.credentials.postgresql.acid.zalan.do    Opaque                                2      65s
gitlabhq-test-reader-user.devops-gitlab-cluster-test-psql.credentials.postgresql.acid.zalan.do   Opaque                                2      65s
gitlabhq-test-writer-user.devops-gitlab-cluster-test-psql.credentials.postgresql.acid.zalan.do   Opaque                                2      66s
postgres-pod-token-p7b4g                                                                         kubernetes.io/service-account-token   3      66s
postgres.devops-gitlab-cluster-test-psql.credentials.postgresql.acid.zalan.do                    Opaque                                2      66s
standby.devops-gitlab-cluster-test-psql.credentials.postgresql.acid.zalan.do                     Opaque                                2      66s
```

Looks OK.
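The Secret names follow a fixed pattern, which is useful when they have to go into values. Below is a minimal Python sketch that rebuilds them. It assumes the operator's default `{username}.{cluster}.credentials.postgresql.acid.zalan.do` name template, and that underscores in role names are replaced with hyphens, which is what the gitlabhq-test-* Secrets above suggest. The `_owner_user`/`_reader_user`/`_writer_user` role names are inferred from that listing:

```python
"""Rebuild Zalando postgres-operator Secret names (sketch, assumptions noted above)."""

# Assumed default template: {username}.{cluster}.credentials.postgresql.acid.zalan.do
SECRET_SUFFIX = "credentials.postgresql.acid.zalan.do"


def secret_name(username: str, cluster: str) -> str:
    """Kubernetes object names cannot contain '_', so role names are hyphenated."""
    return f"{username.replace('_', '-')}.{cluster}.{SECRET_SUFFIX}"


def prepared_db_secrets(database: str, cluster: str) -> list[str]:
    """Secrets for the default users of a preparedDatabases entry (defaultUsers: true)."""
    return [secret_name(f"{database}_{role}_user", cluster)
            for role in ("owner", "reader", "writer")]


if __name__ == "__main__":
    cluster = "devops-gitlab-cluster-test-psql"
    print(secret_name("postgres", cluster))
    for name in prepared_db_secrets("gitlabhq_test", cluster):
        print(name)
```

The postgres Secret produced this way is the one we will reference in global.psql.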

AWS S3

Now let’s create buckets.

We will need:

- for the registry — Configure Registry settings

- for backups — Backups to S3

- and a bunch for LFS, Artifacts, Uploads, Packages, External MR diffs, and Dependency Proxy

We add two things to the bucket names. The first is the prefix or, as our own "identifier": S3 bucket names are global across all AWS accounts, so a prefix helps to avoid the BucketAlreadyExists error. The second is the environment name test. So the list comes out like this:

- or-gitlab-registry-test

- or-gitlab-artifacts-test

- or-git-lfs-test

- or-gitlab-packages-test

- or-gitlab-uploads-test

- or-gitlab-mr-diffs-test

- or-gitlab-terraform-state-test

- or-gitlab-ci-secure-files-test

- or-gitlab-dependency-proxy-test

- or-gitlab-pages-test

- or-gitlab-backups-test

- or-gitlab-tmp-test

I'm not sure we will need all of them, we'll see while setting up GitLab and its features, but for now let's create them all.
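With a dozen buckets, it is easy to mistype one. Below is a small Python sketch that builds the list above from the or prefix and the test environment name. It also checks each name against the basic S3 naming rules: 3 to 63 characters, only lowercase letters, digits, dots, and hyphens, and a letter or digit at the start and end. It does not cover every S3 rule, for example the ban on IP-address-like names:

```python
"""Build and sanity-check the GitLab S3 bucket names (sketch)."""
import re

# Base names from the list above; note "git-lfs", not "gitlab-lfs".
BASE_NAMES = [
    "gitlab-registry", "gitlab-artifacts", "git-lfs", "gitlab-packages",
    "gitlab-uploads", "gitlab-mr-diffs", "gitlab-terraform-state",
    "gitlab-ci-secure-files", "gitlab-dependency-proxy", "gitlab-pages",
    "gitlab-backups", "gitlab-tmp",
]

# Basic rules only: 3-63 chars of [a-z0-9.-], starting and ending with [a-z0-9].
BUCKET_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def bucket_names(prefix: str, env: str, bases=BASE_NAMES) -> list[str]:
    names = [f"{prefix}-{base}-{env}" for base in bases]
    bad = [name for name in names if not BUCKET_RE.match(name)]
    if bad:
        raise ValueError(f"invalid S3 bucket names: {bad}")
    return names


if __name__ == "__main__":
    for name in bucket_names("or", "test"):
        print(name)
```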

Create with the AWS CLI:

```
$ aws --profile internal s3 mb s3://or-gitlab-registry-test
make_bucket: or-gitlab-registry-test
```

Repeat for the rest of the buckets.
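Instead of repeating the command by hand, the loop can be scripted. Below is a minimal Python sketch. By default (`dry_run=True`) it only prints the `aws s3 mb` commands, and it runs them with `subprocess` only when asked. The `internal` profile is the one used above. The optional `--region` flag is my addition; without it, the bucket is created in the profile's default region:

```python
"""Create the GitLab buckets with the AWS CLI in a loop (sketch)."""
import shlex
import subprocess


def mb_commands(buckets, profile="internal", region=None):
    """Return one `aws s3 mb` argv list per bucket."""
    cmds = []
    for bucket in buckets:
        cmd = ["aws", "--profile", profile, "s3", "mb", f"s3://{bucket}"]
        if region:
            cmd += ["--region", region]
        cmds.append(cmd)
    return cmds


def create_buckets(buckets, profile="internal", region=None, dry_run=True):
    for cmd in mb_commands(buckets, profile, region):
        print(shlex.join(cmd))  # always show what is (or would be) executed
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop on the first failure


if __name__ == "__main__":
    create_buckets(["or-gitlab-registry-test", "or-gitlab-artifacts-test"])
```

Pass the full list of names and `dry_run=False` to actually create the buckets.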

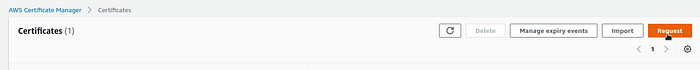

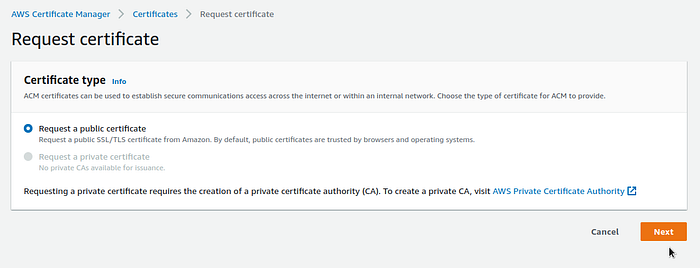

AWS Certificate Manager

For the Ingress we need a TLS certificate (see Requesting a public certificate), and to get a certificate we obviously need a domain.

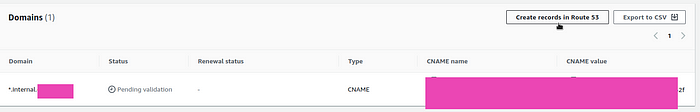

In this case, we will use internal.example.com, for which we will create a wildcard certificate in ACM:

In the FQDN, specify *.internal.example.com to cover all subdomains. Then we will use the parameters global.hosts.domain=internal.example.com and hostSuffix=test. With them, the chart will create several Ingresses and Services, and ExternalDNS will create the necessary records for them in Route53.

In Validation Method, select DNS. It is the simplest way, especially since the domain zone is hosted in Route53 and everything can be created in a couple of clicks:

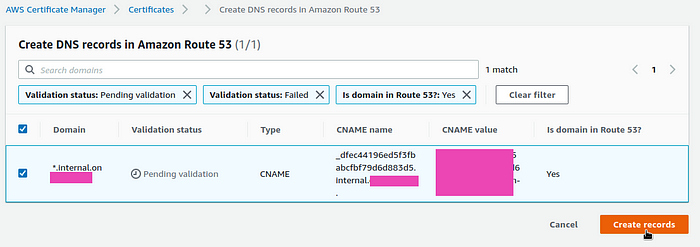

Open the certificate. It is now in the Pending validation state; click the Create records in Route53 button:

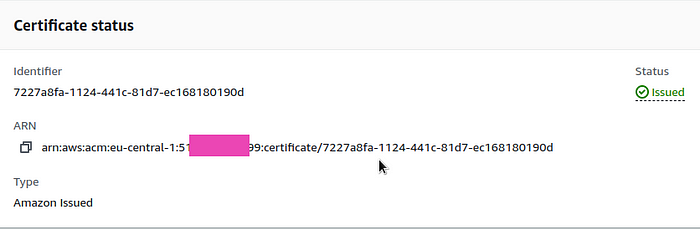

Now its status has changed to Issued. Save its ARN, we will need it in our values:

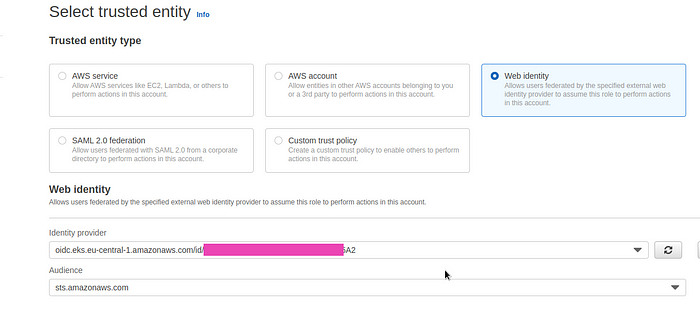

AWS IAM Policy and IAM Role for ServiceAccount

Next, we need to create an IAM Policy and an IAM Role for the ServiceAccount that will provide access to AWS S3. For details, see Kubernetes: ServiceAccount from AWS IAM Role for Kubernetes Pod; here is a short version.
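The policy is repetitive. Every bucket appears once as `arn:aws:s3:::<bucket>/*` for object-level actions, and once as a bare bucket ARN for bucket-level actions. Below is a Python sketch that generates the same structure from a list of bucket names, so that adding a bucket is a one-line change. One caveat, as far as I know: `s3:ListAllMyBuckets` is not scoped to buckets and only takes effect with `"Resource": "*"`. It is kept here just to mirror the policy:

```python
"""Generate the S3 IAM policy for a list of GitLab buckets (sketch)."""
import json

OBJECT_ACTIONS = [
    "s3:PutObject", "s3:GetObject", "s3:DeleteObject",
    "s3:ListMultipartUploadParts", "s3:AbortMultipartUpload",
]
BUCKET_ACTIONS = [
    "s3:ListBucket", "s3:ListAllMyBuckets", "s3:GetBucketLocation",
    "s3:ListBucketMultipartUploads",
]


def s3_policy(buckets):
    return {
        "Statement": [
            {   # object-level actions apply to "<bucket>/*"
                "Action": OBJECT_ACTIONS,
                "Effect": "Allow",
                "Resource": [f"arn:aws:s3:::{b}/*" for b in buckets],
            },
            {   # bucket-level actions apply to the bucket ARN itself
                "Action": BUCKET_ACTIONS,
                "Effect": "Allow",
                "Resource": [f"arn:aws:s3:::{b}" for b in buckets],
            },
        ],
        "Version": "2012-10-17",
    }


if __name__ == "__main__":
    print(json.dumps(s3_policy(["or-gitlab-registry-test", "or-gitlab-backups-test"]), indent=2))
```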

Create the policy:

```json
{
  "Statement": [
    {
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:DeleteObject",
        "s3:ListMultipartUploadParts",
        "s3:AbortMultipartUpload"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:s3:::or-gitlab-registry-test/*",
        "arn:aws:s3:::or-gitlab-artifacts-test/*",
        "arn:aws:s3:::or-git-lfs-test/*",
        "arn:aws:s3:::or-gitlab-packages-test/*",
        "arn:aws:s3:::or-gitlab-uploads-test/*",
        "arn:aws:s3:::or-gitlab-mr-diffs-test/*",
        "arn:aws:s3:::or-gitlab-terraform-state-test/*",
        "arn:aws:s3:::or-gitlab-ci-secure-files-test/*",
        "arn:aws:s3:::or-gitlab-dependency-proxy-test/*",
        "arn:aws:s3:::or-gitlab-backups-test/*",
        "arn:aws:s3:::or-gitlab-tmp-test/*"
      ]
    },
    {
      "Action": [
        "s3:ListBucket",
        "s3:ListAllMyBuckets",
        "s3:GetBucketLocation",
        "s3:ListBucketMultipartUploads"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:s3:::or-gitlab-registry-test",
        "arn:aws:s3:::or-gitlab-artifacts-test",
        "arn:aws:s3:::or-git-lfs-test",
        "arn:aws:s3:::or-gitlab-packages-test",
        "arn:aws:s3:::or-gitlab-uploads-test",
        "arn:aws:s3:::or-gitlab-mr-diffs-test",
        "arn:aws:s3:::or-gitlab-terraform-state-test",
        "arn:aws:s3:::or-gitlab-ci-secure-files-test",
        "arn:aws:s3:::or-gitlab-dependency-proxy-test",
        "arn:aws:s3:::or-gitlab-backups-test",
        "arn:aws:s3:::or-gitlab-tmp-test"
      ]
    }
  ],
  "Version": "2012-10-17"
}
```

Create an IAM Role, choosing Web identity:

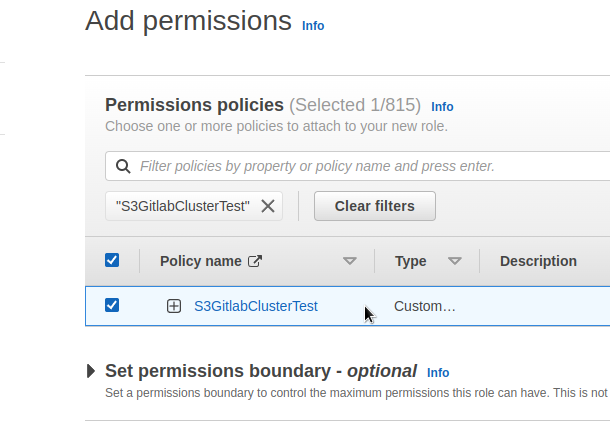
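Choosing Web identity gives the role a trust policy that allows `sts:AssumeRoleWithWebIdentity` from the cluster's OIDC provider. For reference, below is a Python sketch of such a trust policy. The account ID and OIDC issuer in the example are hypothetical placeholders. The `*` wildcard, which restricts the role to all ServiceAccounts of the gitlab-cluster-test namespace, is my assumption; narrow it down to specific ServiceAccount names if needed:

```python
"""Build an IAM trust policy for EKS OIDC web identity (IRSA) (sketch)."""
import json


def irsa_trust_policy(account_id, oidc_issuer, namespace, service_account="*"):
    # The OIDC provider ARN and condition keys use the issuer URL without "https://".
    provider = oidc_issuer.removeprefix("https://")
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Federated": f"arn:aws:iam::{account_id}:oidc-provider/{provider}"},
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                # StringLike allows the "*" wildcard in the ServiceAccount name
                "StringLike": {
                    f"{provider}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                    f"{provider}:aud": "sts.amazonaws.com",
                },
            },
        }],
    }


if __name__ == "__main__":
    # Hypothetical values; the real issuer can be taken from
    # `aws eks describe-cluster --name <cluster> --query cluster.identity.oidc.issuer`
    policy = irsa_trust_policy(
        "123456789012",
        "https://oidc.eks.eu-central-1.amazonaws.com/id/EXAMPLE0123456789",
        "gitlab-cluster-test",
    )
    print(json.dumps(policy, indent=2))
```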

Attach the Policy created above:

Looks like that’s all? Now, we can write the values.

GitLab Helm deployment

Creating values.yaml

All the default values can be found in the chart's values.yaml and can be used as an example. Don't copy them wholesale, though: write only the settings that differ from the defaults.

global

hosts

Specify the domain that the chart will use for the Ingresses and Services it creates. As a result, we will get a set of domains of the form gitlab.internal.example.com, registry.internal.example.com, etc.

For SSH, the example specifies a separate subdomain, because a separate Service with a Network Load Balancer will be created to serve SSH on TCP port 22.

The hostSuffix will add a suffix to the created records, i.e. the result will be subdomains of the form gitlab-test.internal.example.com and registry-test.internal.example.com.

However, hostSuffix does not apply to the SSH hostname, so we specify it with the suffix included.
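The naming rule described above can be sketched in a few lines of Python: the service name, a dash, the suffix, then the domain. This only predicts the names; the real hostnames are rendered by the chart's templates. The list of services is an assumption based on the Ingresses the chart creates for us:

```python
"""Predict hostnames produced by global.hosts.domain + hostSuffix (sketch)."""


def gitlab_hosts(domain, host_suffix=None, services=("gitlab", "registry", "kas")):
    """Return {service: fqdn}; the suffix is appended to the first label with a dash."""
    def label(service):
        return f"{service}-{host_suffix}" if host_suffix else service
    return {service: f"{label(service)}.{domain}" for service in services}


if __name__ == "__main__":
    for service, fqdn in gitlab_hosts("internal.example.com", "test").items():
        print(f"{service:10} {fqdn}")
    # The SSH host is not affected by hostSuffix: set it explicitly in global.hosts.ssh.
```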

The result will look like the following:

```yaml
global:
  hosts:
    domain: internal.example.com
    hostSuffix: test
    ssh: gitlab-shell-test.internal.example.com
```

ingress

Take alb-full.yaml as an example. The only thing we add is the load_balancing.algorithm.type=least_outstanding_requests target group attribute:

```yaml
ingress:
  # Common annotations used by kas, registry, and webservice
  annotations:
    alb.ingress.kubernetes.io/backend-protocol: HTTP
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:eu-central-1:514***799:certificate/7227a8fa-1124-441c-81d7-ec168180190d
    alb.ingress.kubernetes.io/group.name: gitlab
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS": 443}]'
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-group-attributes: load_balancing.algorithm.type=least_outstanding_requests
    alb.ingress.kubernetes.io/target-type: ip
    kubernetes.io/ingress.class: alb
    nginx.ingress.kubernetes.io/connection-proxy-header: "keep-alive"
  class: none
  configureCertmanager: false
  enabled: true
  path: /*
  pathType: ImplementationSpecific
  provider: aws
  tls:
    enabled: false
```

If you use KAS, it will need a separate LoadBalancer; we will configure it a bit later in the gitlab.kas block.
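Annotation values such as target-group-attributes are plain comma-separated key=value strings. A typo in them, for example least_outstanding_request instead of least_outstanding_requests, is easy to miss in review. Below is a minimal Python sketch that parses such a value and checks the algorithm name against the values I know to be valid for ALB target groups: round_robin and least_outstanding_requests. The set is not necessarily exhaustive:

```python
"""Parse and sanity-check an ALB target-group-attributes annotation value (sketch)."""

# Known-valid ALB load balancing algorithms; not necessarily an exhaustive list.
KNOWN_ALGORITHMS = {"round_robin", "least_outstanding_requests"}


def parse_attributes(value):
    """Split 'k1=v1,k2=v2' into a dict, rejecting pairs without '=' or a key."""
    attrs = {}
    for pair in value.split(","):
        key, sep, val = pair.strip().partition("=")
        if not sep or not key:
            raise ValueError(f"malformed attribute: {pair!r}")
        attrs[key] = val
    return attrs


def check_target_group_attributes(value):
    attrs = parse_attributes(value)
    algorithm = attrs.get("load_balancing.algorithm.type")
    if algorithm is not None and algorithm not in KNOWN_ALGORITHMS:
        raise ValueError(f"unknown load balancing algorithm: {algorithm!r}")
    return attrs


if __name__ == "__main__":
    print(check_target_group_attributes(
        "load_balancing.algorithm.type=least_outstanding_requests"))
    print(check_target_group_attributes(
        "stickiness.enabled=true,stickiness.lb_cookie.duration_seconds=86400"))
```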

psql

Find the name of the Service that was created during the deployment of the PostgreSQL cluster:

```
$ kk -n gitlab-cluster-test get svc
NAME                                     TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
devops-gitlab-cluster-test-psql          ClusterIP   172.20.67.135    <none>        5432/TCP   63m
devops-gitlab-cluster-test-psql-config   ClusterIP   None             <none>        <none>     63m
devops-gitlab-cluster-test-psql-repl     ClusterIP   172.20.165.249   <none>        5432/TCP   63m
```

For now, we will take the default postgres user from its Secret:

```
$ kk -n gitlab-cluster-test get secret postgres.devops-gitlab-cluster-test-psql.credentials.postgresql.acid.zalan.do
NAME                                                                            TYPE     DATA   AGE
postgres.devops-gitlab-cluster-test-psql.credentials.postgresql.acid.zalan.do   Opaque   2      67m
```

For production, a separate user will be needed, because postgres is the superuser (the equivalent of root).

Prepare the config:

```yaml
psql:
  host: devops-gitlab-cluster-test-psql
  database: gitlabhq_test
  username: postgres
  password:
    useSecret: true
    secret: postgres.devops-gitlab-cluster-test-psql.credentials.postgresql.acid.zalan.do
    key: password
```

redis

Configure Redis chart-specific settings

Configure the GitLab chart with an external Redis

For now, let's leave everything at the defaults. Redis holds GitLab's cache as well as the Sidekiq job queues, and we'll see how it works and what resources it needs.

registry

In the globals, for the registry we specify only the bucket:

```yaml
registry:
  bucket: or-gitlab-registry-test
```

gitaly

For now, let's leave it as it is. Later we'll think about moving it to a dedicated WorkerNode.

We will need to monitor how it uses resources. With our 150–200 users, though, it is unlikely that we will need to fine-tune it, let alone deploy a Praefect cluster.

minio

Disable it, as we will use AWS S3:

```yaml
minio:
  enabled: false
```

grafana

Let’s turn it on to see what’s in it (well, nothing :-) )

```yaml
grafana:
  enabled: true
```

appConfig

The list of buckets goes into the values.

For object_store, we need to add a Secret that specifies the provider and region of the buckets. We don't use ACCESS/SECRET keys here, as access will come from the ServiceAccount.

Let's take rails.s3.yaml as an example, see connection:

```yaml
provider: AWS
region: eu-central-1
```

Create the Secret:

```
$ kk -n gitlab-cluster-test create secret generic gitlab-rails-storage --from-file=connection=rails.s3.yaml
```

And describe the appConfig like this:

```yaml
appConfig:
  enableUsagePing: false
  enableSeatLink: true # disable?
  object_store:
    enabled: true
    proxy_download: true
    connection:
      secret: gitlab-rails-storage
      key: connection
  artifacts:
    bucket: or-gitlab-artifacts-test
  lfs:
    bucket: or-git-lfs-test
  packages:
    bucket: or-gitlab-packages-test
  uploads:
    bucket: or-gitlab-uploads-test
  externalDiffs:
    bucket: or-gitlab-mr-diffs-test
  terraformState:
    bucket: or-gitlab-terraform-state-test
  ciSecureFiles:
    bucket: or-gitlab-ci-secure-files-test
  dependencyProxy:
    bucket: or-gitlab-dependency-proxy-test
  backups:
    bucket: or-gitlab-backups-test
    tmpBucket: or-gitlab-tmp-test
```

serviceAccount

See Kubernetes: ServiceAccount from AWS IAM Role for Kubernetes Pod.

It seems this can also be done without creating a ServiceAccount, simply through annotations, see IAM roles for AWS when using the GitLab chart.

But we will use a ServiceAccount. The role with the policy has already been created, so add it:

```yaml
serviceAccount:
  enabled: true
  create: true
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::514***799:role/S3GitlabClusterTest
```

So, it seems we are done with the globals.
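Bucket names now live in three places: the S3 buckets themselves, the IAM policy, and the values. Below is a minimal Python sketch that finds buckets referenced in appConfig (plus global.registry.bucket) that are missing from the policy's Resource ARNs. To stay within the standard library, it assumes appConfig is already loaded into a Python dict, for example with a YAML library of your choice. The helper names are hypothetical:

```python
"""Cross-check bucket names in the values against the IAM policy (sketch)."""

ARN_PREFIX = "arn:aws:s3:::"


def referenced_buckets(app_config, registry_bucket):
    """Collect bucket/tmpBucket values from an appConfig-like dict plus global.registry.bucket."""
    names = {registry_bucket}
    for section in app_config.values():
        if isinstance(section, dict):
            for key in ("bucket", "tmpBucket"):
                if key in section:
                    names.add(section[key])
    return names


def granted_buckets(policy):
    """Bucket names that appear in any Resource ARN of the policy."""
    granted = set()
    for statement in policy["Statement"]:
        for arn in statement["Resource"]:
            if arn.startswith(ARN_PREFIX):
                granted.add(arn[len(ARN_PREFIX):].split("/", 1)[0])
    return granted


def missing_from_policy(app_config, registry_bucket, policy):
    return sorted(referenced_buckets(app_config, registry_bucket) - granted_buckets(policy))


if __name__ == "__main__":
    app_config = {
        "enableUsagePing": False,
        "artifacts": {"bucket": "or-gitlab-artifacts-test"},
        "backups": {"bucket": "or-gitlab-backups-test", "tmpBucket": "or-gitlab-tmp-test"},
    }
    policy = {"Statement": [{"Resource": [
        "arn:aws:s3:::or-gitlab-artifacts-test/*",
        "arn:aws:s3:::or-gitlab-backups-test",
        "arn:aws:s3:::or-gitlab-registry-test",
    ]}]}
    print(missing_from_policy(app_config, "or-gitlab-registry-test", policy))
```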

registry

Defining the Registry Configuration

Here we need to configure the storage. Its settings are kept in a Secret, which also has to specify the bucket name. The global.registry parameter is used for backups, while the one here is used by the Registry service itself, see Docker Registry images.

As an example, let's take registry.s3.yaml, but without keys, because the Registry will get its own ServiceAccount with the IAM Role:

```yaml
s3:
  bucket: or-gitlab-registry-test
  region: eu-central-1
  v4auth: true
```

Create the Secret:

```
$ kk -n gitlab-cluster-test create secret generic registry-storage --from-file=config=registry.s3.yaml
```

Describe the configuration:

```yaml
registry:
  enabled: true
  service:
    type: NodePort
  storage:
    secret: registry-storage
    key: config
```

gitlab - Services

Describe Services for kas, webservice, and gitlab-shell in a dedicated gitlab block, from the same example alb-full.yaml.

For the gitlab-shell Service, specify the hostname in the external-dns.alpha.kubernetes.io/hostname annotation:

```yaml
gitlab:
  kas:
    enabled: true
    ingress:
      # Specific annotations needed for kas service to support websockets
      annotations:
        alb.ingress.kubernetes.io/healthcheck-path: /liveness
        alb.ingress.kubernetes.io/healthcheck-port: "8151"
        alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
        alb.ingress.kubernetes.io/load-balancer-attributes: idle_timeout.timeout_seconds=4000,routing.http2.enabled=false
        alb.ingress.kubernetes.io/target-group-attributes: stickiness.enabled=true,stickiness.lb_cookie.duration_seconds=86400
        alb.ingress.kubernetes.io/target-type: ip
        kubernetes.io/tls-acme: "true"
        nginx.ingress.kubernetes.io/connection-proxy-header: "keep-alive"
        nginx.ingress.kubernetes.io/x-forwarded-prefix: "/path"
    # k8s services exposed via an ingress rule to an ELB need to be of type NodePort
    service:
      type: NodePort
  webservice:
    enabled: true
    service:
      type: NodePort
  # gitlab-shell (ssh) needs an NLB
  gitlab-shell:
    enabled: true
    service:
      annotations:
        external-dns.alpha.kubernetes.io/hostname: "gitlab-shell-test.internal.example.com"
        service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "ip"
        service.beta.kubernetes.io/aws-load-balancer-scheme: "internet-facing"
        service.beta.kubernetes.io/aws-load-balancer-type: "external"
      type: LoadBalancer
```

Other

Disable unnecessary services:

```yaml
certmanager:
  install: false
postgresql:
  install: false
gitlab-runner:
  install: false
nginx-ingress:
  enabled: false
```

The full values.yaml

As a result, we get the following configuration:

```yaml
global:
  hosts:
    domain: internal.example.com
    hostSuffix: test
    ssh: gitlab-shell-test.internal.example.com
  ingress:
    # Common annotations used by kas, registry, and webservice
    annotations:
      alb.ingress.kubernetes.io/backend-protocol: HTTP
      alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:eu-central-1:514***799:certificate/7227a8fa-1124-441c-81d7-ec168180190d
      alb.ingress.kubernetes.io/group.name: gitlab
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS": 443}]'
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-group-attributes: load_balancing.algorithm.type=least_outstanding_requests
      alb.ingress.kubernetes.io/target-type: ip
      kubernetes.io/ingress.class: alb
      nginx.ingress.kubernetes.io/connection-proxy-header: "keep-alive"
    class: none
    configureCertmanager: false
    enabled: true
    path: /*
    pathType: ImplementationSpecific
    provider: aws
    tls:
      enabled: false
  psql:
    host: devops-gitlab-cluster-test-psql
    database: gitlabhq_test
    username: postgres
    password:
      useSecret: true
      secret: postgres.devops-gitlab-cluster-test-psql.credentials.postgresql.acid.zalan.do
      key: password
  registry:
    bucket: or-gitlab-registry-test
  minio:
    enabled: false
  grafana:
    enabled: true
  appConfig:
    enableUsagePing: false
    enableSeatLink: true # disable?
    object_store:
      enabled: true
      proxy_download: true
      connection:
        secret: gitlab-rails-storage
        key: connection
    artifacts:
      bucket: or-gitlab-artifacts-test
    lfs:
      bucket: or-git-lfs-test
    packages:
      bucket: or-gitlab-packages-test
    uploads:
      bucket: or-gitlab-uploads-test
    externalDiffs:
      bucket: or-gitlab-mr-diffs-test
    terraformState:
      bucket: or-gitlab-terraform-state-test
    ciSecureFiles:
      bucket: or-gitlab-ci-secure-files-test
    dependencyProxy:
      bucket: or-gitlab-dependency-proxy-test
    backups:
      bucket: or-gitlab-backups-test
      tmpBucket: or-gitlab-tmp-test
  serviceAccount:
    enabled: true
    create: true
    annotations:
      eks.amazonaws.com/role-arn: arn:aws:iam::514***799:role/S3GitlabClusterTest
  common:
    labels:
      environment: test

gitlab:
  kas:
    enabled: true
    ingress:
      # Specific annotations needed for kas service to support websockets
      annotations:
        alb.ingress.kubernetes.io/healthcheck-path: /liveness
        alb.ingress.kubernetes.io/healthcheck-port: "8151"
        alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
        alb.ingress.kubernetes.io/load-balancer-attributes: idle_timeout.timeout_seconds=4000,routing.http2.enabled=false
        alb.ingress.kubernetes.io/target-group-attributes: stickiness.enabled=true,stickiness.lb_cookie.duration_seconds=86400
        alb.ingress.kubernetes.io/target-type: ip
        kubernetes.io/tls-acme: "true"
        nginx.ingress.kubernetes.io/connection-proxy-header: "keep-alive"
        nginx.ingress.kubernetes.io/x-forwarded-prefix: "/path"
    # k8s services exposed via an ingress rule to an ELB need to be of type NodePort
    service:
      type: NodePort
  webservice:
    enabled: true
    service:
      type: NodePort
  # gitlab-shell (ssh) needs an NLB
  gitlab-shell:
    enabled: true
    service:
      annotations:
        external-dns.alpha.kubernetes.io/hostname: "gitlab-shell-test.internal.example.com"
        service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "ip"
        service.beta.kubernetes.io/aws-load-balancer-scheme: "internet-facing"
        service.beta.kubernetes.io/aws-load-balancer-type: "external"
      type: LoadBalancer

registry:
  enabled: true
  service:
    type: NodePort
  storage:
    secret: registry-storage
    key: config

certmanager:
  install: false

postgresql:
  install: false

gitlab-runner:
  install: false

nginx-ingress:
  enabled: false
```

GitLab deploy

Well, that’s all? Let’s deploy:

```
$ helm repo add gitlab https://charts.gitlab.io/
$ helm repo update
$ helm upgrade --install --namespace gitlab-cluster-test gitlab gitlab/gitlab --timeout 600s -f gitlab-cluster-test-values.yaml
```

Check the Ingresses:

```
$ kk -n gitlab-cluster-test get ingress
NAME                        CLASS    HOSTS                                ADDRESS                                  PORTS   AGE
gitlab-grafana-app          <none>   gitlab-test.internal.example.com     k8s-***.eu-central-1.elb.amazonaws.com   80      117s
gitlab-kas                  <none>   kas-test.internal.example.com        k8s-***.eu-central-1.elb.amazonaws.com   80      117s
gitlab-registry             <none>   registry-test.internal.example.com   k8s-***.eu-central-1.elb.amazonaws.com   80      117s
gitlab-webservice-default   <none>   gitlab-test.internal.example.com     k8s-***.eu-central-1.elb.amazonaws.com   80      117s
```

Open the https://gitlab-test.internal.example.com URL:

Get the root password:

```
$ kubectl -n gitlab-cluster-test get secret gitlab-gitlab-initial-root-password -ojsonpath='{.data.password}' | base64 --decode ; echo
cV6***y1t
```

Log in as root:

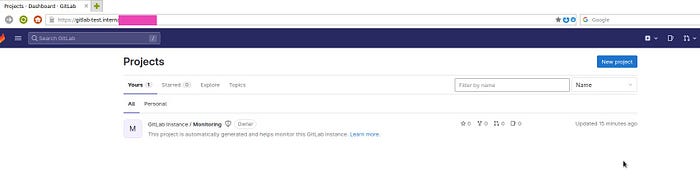
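The same kubectl and base64 pipeline can be done from Python, which is handy in scripts. Below is a minimal sketch, assuming kubectl is configured for the cluster. It reads the Secret as JSON and base64-decodes the requested key:

```python
"""Read a Kubernetes Secret value via kubectl and decode it (sketch)."""
import base64
import json
import subprocess


def decode_secret(secret_json: str, key: str = "password") -> str:
    """Secret .data values are base64-encoded; return the decoded string."""
    data = json.loads(secret_json)["data"]
    return base64.b64decode(data[key]).decode("utf-8")


def get_secret_value(namespace: str, name: str, key: str = "password") -> str:
    """Fetch the Secret with `kubectl get secret -o json` and decode one key."""
    result = subprocess.run(
        ["kubectl", "-n", namespace, "get", "secret", name, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return decode_secret(result.stdout, key)
```

For example, `get_secret_value("gitlab-cluster-test", "gitlab-gitlab-initial-root-password")` returns the root password. The same call with `gitlab-grafana-initial-password` returns the Grafana one.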

Deployment check

Repository

Check that repositories work, that is, the Gitaly and GitLab Shell services.

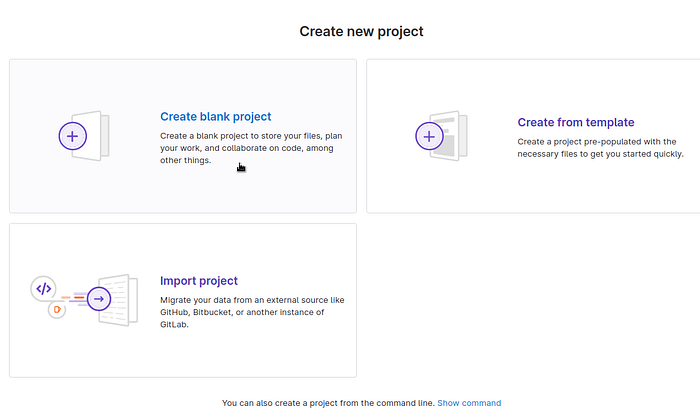

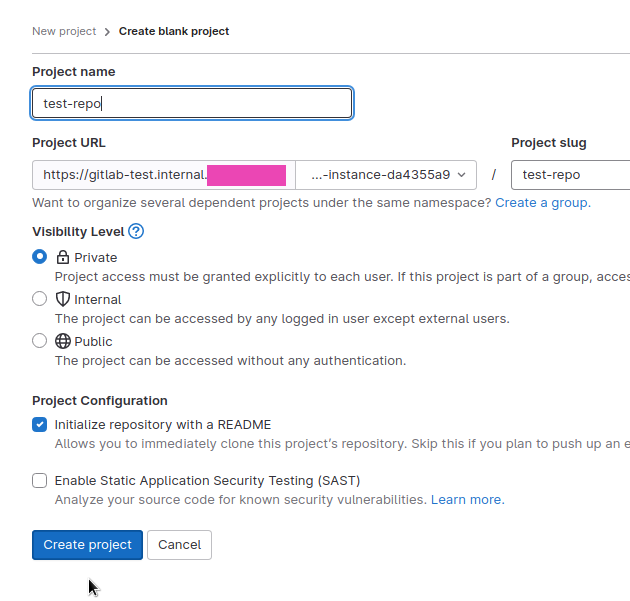

Create a test repository:

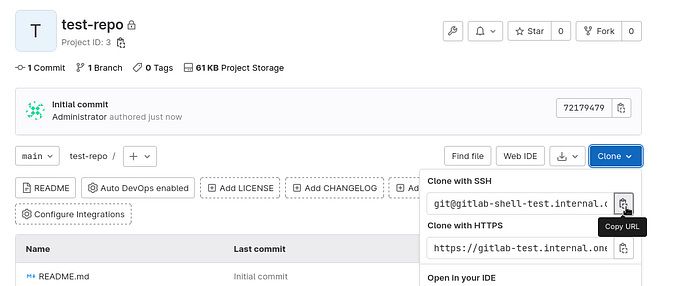

Copy its address. It uses the gitlab-shell-test.internal.example.com subdomain that was specified in the values:

Clone it:

```
$ git clone git@gitlab-shell-test.internal.example.com:gitlab-instance-da4355a9/test-repo.git
Cloning into 'test-repo'...
The authenticity of host 'gitlab-shell-test.internal.example.com (3.***.***.79)' can't be established.
ED25519 key fingerprint is SHA256:xhC1Q/lduNbg49kGljYUb21YlBBsxrG89xE+iCHD+xc.
This key is not known by any other names.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added 'gitlab-shell-test.internal.example.com' (ED25519) to the list of known hosts.
remote: Enumerating objects: 3, done.
remote: Counting objects: 100% (3/3), done.
remote: Compressing objects: 100% (2/2), done.
remote: Total 3 (delta 0), reused 0 (delta 0), pack-reused 0
Receiving objects: 100% (3/3), done.
```

And the content:

$ ll test-repo/

total 8

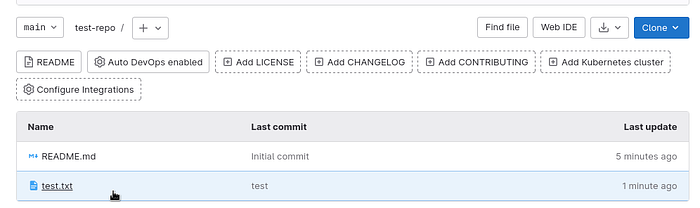

-rw-r — r — 1 setevoy setevoy 6274 Feb 4 13:24 README.mdLet’s try to push some changes back:

```
$ cd test-repo/
$ echo test > test.txt
$ git add test.txt
$ git commit -m "test"
$ git push
Enumerating objects: 4, done.
Counting objects: 100% (4/4), done.
Delta compression using up to 16 threads
Compressing objects: 100% (2/2), done.
Writing objects: 100% (3/3), 285 bytes | 285.00 KiB/s, done.
Total 3 (delta 0), reused 0 (delta 0), pack-reused 0
To gitlab-shell-test.internal.example.com:gitlab-instance-da4355a9/test-repo.git
   7217947..4eb84db  main -> main
```

And in the WebUI:

Okay, it works.

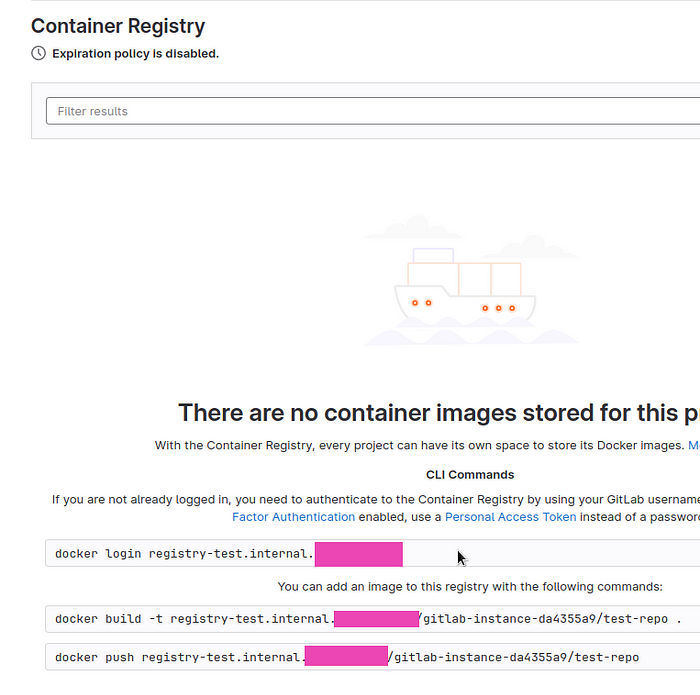

Container Registry

Next, let's check the Registry. Example commands are available in the web interface under Container Registry:

Log in with the same login:password that were used to log in to the GitLab web interface (user root, password from the gitlab-gitlab-initial-root-password Secret):

```
$ docker login registry-test.internal.example.com
Username: root
Password:
…
Login Succeeded
```

Create a Dockerfile:

```dockerfile
FROM busybox
RUN echo "hello world"
```

Build the image, tagging it with the name of our Registry:

```
$ docker build -t registry-test.internal.example.com/gitlab-instance-da4355a9/test-repo:1 .
```

And push it:

```
$ docker push registry-test.internal.example.com/gitlab-instance-da4355a9/test-repo:1
The push refers to repository [registry-test.internal.example.com/gitlab-instance-da4355a9/test-repo]
b64792c17e4a: Mounted from gitlab-instance-da4355a9/test
1: digest: sha256:eb45a54c2c0e3edbd6732b454c8f8691ad412b56dd10d777142ca4624e223c69 size: 528
```

Check the or-gitlab-registry-test S3 bucket:

```
$ aws --profile internal s3 ls or-gitlab-registry-test/docker/registry/v2/repositories/gitlab-instance-da4355a9/test-repo/_manifests/tags/1/
                           PRE current/
                           PRE index/
```

Okay, the Registry works, it has access to S3, and there are no problems with the ServiceAccount.
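The S3 path checked above follows the registry's storage layout: `docker/registry/v2/repositories/<repository>/_manifests/tags/<tag>/`. Below is a small Python sketch that turns an image reference into that prefix, which is useful for checking other images. It assumes the storage root seen in the listing above, and it does not handle digest references (`@sha256:...`):

```python
"""Map an image reference to the registry's S3 tag prefix (sketch)."""

REGISTRY_ROOT = "docker/registry/v2"  # storage root as seen in the `aws s3 ls` output


def split_image_ref(ref):
    """'host/ns/project:tag' -> (host, 'ns/project', tag); a missing tag means 'latest'."""
    host, _, rest = ref.partition("/")
    repository, sep, tag = rest.rpartition(":")
    if not sep:
        repository, tag = rest, "latest"
    return host, repository, tag


def tag_prefix(ref, root=REGISTRY_ROOT):
    _, repository, tag = split_image_ref(ref)
    return f"{root}/repositories/{repository}/_manifests/tags/{tag}/"


if __name__ == "__main__":
    ref = "registry-test.internal.example.com/gitlab-instance-da4355a9/test-repo:1"
    print(tag_prefix(ref))
```

The printed prefix can be passed to `aws s3 ls or-gitlab-registry-test/<prefix>`, as done above.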

ServiceAccounts, and S3 access

But just in case, let’s check ServiceAccounts and access to S3 in other services, for example from the Toolbox Pod:

```
$ kk -n gitlab-cluster-test exec -ti gitlab-toolbox-565889b874-vgqcb -- bash
git@gitlab-toolbox-565889b874-vgqcb:/$ aws s3 ls or-gitlab-registry-test
                           PRE docker/
```

Okay, everything works here too.
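Whether a Pod actually received the IAM Role can also be checked without the AWS CLI. For ServiceAccounts annotated with eks.amazonaws.com/role-arn, the EKS Pod Identity Webhook injects the AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE environment variables. Below is a Python sketch, assuming python3 is available in the container (I have not checked this for the toolbox image):

```python
"""Check from inside a Pod whether IRSA credentials were injected (sketch)."""
import os

# Variables injected by the EKS Pod Identity Webhook for annotated ServiceAccounts.
IRSA_VARS = ("AWS_ROLE_ARN", "AWS_WEB_IDENTITY_TOKEN_FILE")


def irsa_status(env=None):
    """Report the role ARN, missing variables, and whether the token file exists."""
    env = os.environ if env is None else env
    missing = [name for name in IRSA_VARS if not env.get(name)]
    token_file = env.get("AWS_WEB_IDENTITY_TOKEN_FILE")
    return {
        "role_arn": env.get("AWS_ROLE_ARN"),
        "missing": missing,
        "token_file_exists": bool(token_file) and os.path.isfile(token_file),
    }


if __name__ == "__main__":
    print(irsa_status())
```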

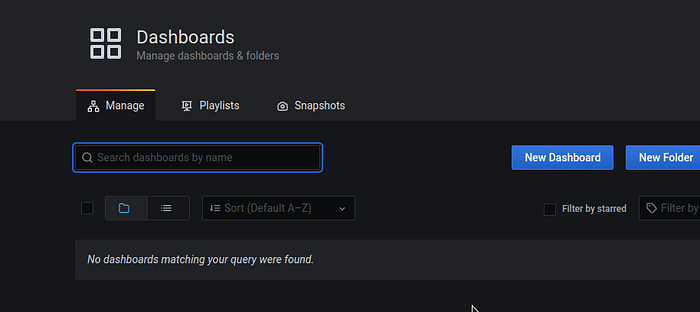

Grafana

Let’s check Grafana — https://gitlab-test.internal.example.com/-/grafana/.

Log in as root, with the password from the Secret:

```
$ kubectl -n gitlab-cluster-test get secret gitlab-grafana-initial-password -ojsonpath='{.data.password}' | base64 --decode ; echo
Cqc***wFS
```

It works too. Dashboards have to be added separately. We will deal with that later; for now, see Grafana JSON Dashboards.

In general, that’s all.

Next up: administration, project migration, and monitoring.

Originally published at RTFM: Linux, DevOps, and system administration.